Semantic technologies are technologies to work with semantics, in our case, meaning (message, signification) in the context of knowledge. This tutorial briefly introduces several of its aspects and refers to more material for interested readers.

When you would like to tell someone about something you know, you usually formulate a sentence, like for example:

Douglas Adams created The Hitchhiker's Guide to the Galaxy (sometimes referred to as HG2G) finally in the United Kingdom in 1979.

The sentence uses traditional grammar to structures various information:

| Grammatical Part | Grammatical Function | Example |

|---|---|---|

| Subject | person or thing about whom the statement is made | Douglas Adams |

| Predicate | a property that a subject has or is characterized by | created |

| Object | is any of several types of arguments | The Hitchhiker's Guide to the Galaxy |

| Parentheses | set off extra information within a sentence | (sometimes referred to as HG2G) |

| Manner | describes how and in what way the action of a verb is carried out | finally |

| Place | where it happens | in the United Kingdom |

| Time | when it happens | in 1979 |

To enable an artificial intelligence (AI) to also "know" what is mainly stated in the sentence, we should store only the three most important grammatical parts:

subject,

predicate and

object.

Because such statements like ("Douglas Adams", "created", "The Hitchhiker's Guide to the Galaxy") have always three parts, we also call them triples (or triple statements).

We often abbreviate the three parts with their first letter (s,p,o) (sometimes refer to them as SPO-triples).

You as a human with all your background knowledge and natural reasoning should be able to understand the sentence. However, our AI is not that smart and would wonder what exactly you mean (ambiguity, vagueness). In fact, there are serveral people named Douglas Adams like an American engineer or an American cricketer. Moreover, does "created" mean by artistic means or the cause to be or to become? And is "The Hitchhiker's Guide to the Galaxy" the novel or the film? Moreover, instead of just mentioning, we should specifically refer to what we mean without any ambiguity for the AI. There are many possibilities to implement this.

Since we would like to transfer knowledge from one AI system to another, we should do it in a way everybody is familiar with. To allow a group of people commit to a certain way, technical standards are usually defined. For example, this webpage was sent to you with the HyperText Transfer Protocol (HTTP), which both the server and your browser understand. To transfer knowledge in form of statements like shown above, it would be helpful to have a standardized way, too. Fortunately, the World Wide Web Consortium (W3C), the main international standards organization for the World Wide Web, already recommends several standards to give you and other researchers one standard way of working with knowledge.

The standards come from the idea, that the Web should not only be human-readable, but also machine-readable by adding semantic (= meaning) to webpages. This idea was coined Semantic Web. In the following is a comparison:

Web: "Hypertext Markup Language (HTML) is enough to present it to humans."

<div id="42">Douglas Adams created The Hitchhiker's Guide to the Galaxy <i>(sometimes referred to as <b>HG2G</b>)</i> finally in the United Kingdom in 1979.</div>Semantic Web: "why not also send statements to enable machines to process it?"

(Douglas Adams, created, The Hitchhiker's Guide to the Galaxy)

To avoid that everybody sends statements like on the right side in different ways, W3C defined a standard for it. Since the statements typically describe a web resource in an essential supporting structure (known as framework), it was named Resource Description Framework (RDF). Using it, we can express statements as RDF triples.

To also avoid that everybody refers to resources in different ways, the standard also defines a uniform (consistent, systematic) way to identify the resources. Therefore, you have to use Uniform Resource Identifiers (URIs) which define how your identifiers (character strings) have to be stuctured.

URI = scheme ":" ["//" authority] path ["?" query] ["#" fragment]There are related identifiers you may come in contact with, which are summarized in the following table.

| Identifier | Abbrev. | Function | Example |

|---|---|---|---|

| Uniform Resource Name | URN | you can name the resource, but not locate it | urn:isbn:0-486-27557-4 |

| Uniform Resource Locator | URL | you can locate the resource | https://en.wikipedia.org/wiki/Douglas_Adams |

| Uniform Resource Identifier | URI | generalizes URN and URL | data:text/plain;charset=iso-8859-7,%be%fa%be |

| Internationalized Resource Identifier | IRI | generalizes URI, expanding the set of permitted characters | https://en.wiktionary.org/wiki/Ῥόδος |

Since URIs can get rather long and often share the same prefix (part of a string attached to a beginning of a string), there is the idea to have a compact representation of them: Compact URI (CURIE).

This way, we define only once a prefix named wiki: for https://en.wikipedia.org/wiki/ and can write from then on short versions, e.g.

wiki:Douglas_Adams.

There are software libraries to develop with RDF. In this document, we support two programming languages:

Java 17 developers can use the latest  Jena library.

Jena library.

Add the dependency to your Maven POM project.

<dependency>

<groupId>org.apache.jena</groupId>

<artifactId>jena-core</artifactId>

<version>4.7.0</version>

</dependency>

Java 8 developers can use the  Jena library version 3.17.0.

Jena library version 3.17.0.

<dependency>

<groupId>org.apache.jena</groupId>

<artifactId>jena-core</artifactId>

<version>3.17.0</version>

</dependency>

Python 3 developers can use the  rdflib library.

rdflib library.

Install the dependency with the package installer for Python (pip).

$ pip install rdflib

For educational purpose the code is more explicit and rather long. Often the libraries provide shortcut methods which can be used by experienced developers. The reader is always encouraged to use the autocomplete feature of the IDE to discover more methods (functions).

Use the cheat sheet for an overview of the API in Jena.

Jena Cheat Sheet

Jena Cheat Sheet

To store RDF statements, also known as triples, you need a triplestore. Just consider the data model as a mathematical set of triple entries (i.e. without duplicates) where you can add and remove triple statements (atoms). Since their subjects and objects can also be interpreted as nodes in a directed edge-labelled graph, it is also named an RDF graph. Because the statements express some knowledge, it is also called knowledge graph and because data is interlinked, it is also called linked data.

import org.apache.jena.rdf.model.Model;

import org.apache.jena.rdf.model.ModelFactory;

Model model = ModelFactory.createDefaultModel();from rdflib import Graph

g = Graph()

Because we have to use URIs, they need to be created first. At the predicate position only a certain kind of resource is considered: a resource describing a property.

import org.apache.jena.rdf.model.ResourceFactory;

import org.apache.jena.rdf.model.Resource;

import org.apache.jena.rdf.model.Property;

Resource subject = ResourceFactory.createResource("https://en.wikipedia.org/wiki/Douglas_Adams"); //or model.createResource

Property predicate = ResourceFactory.createProperty("http://wordnet-rdf.princeton.edu/id/01643749-v"); //or model.createProperty

Resource object = ResourceFactory.createResource("https://en.wikipedia.org/wiki/The_Hitchhiker%27s_Guide_to_the_Galaxy_(novel)");

from rdflib import URIRef

subject = URIRef('https://en.wikipedia.org/wiki/Douglas_Adams')

predicate = URIRef('http://wordnet-rdf.princeton.edu/id/01643749-v')

obj = URIRef('https://en.wikipedia.org/wiki/The_Hitchhiker%27s_Guide_to_the_Galaxy_(novel)')

If you really do not have resolvable (web) addresses, but need ad hoc URIs for your resources, consider creating URNs with Universally Unique Identifiers (UUIDs) (and don't worry: they will never clash).

import java.util.UUID;

Resource res = ResourceFactory.createResource("urn:uuid:" + UUID.randomUUID().toString());

import uuid

res = URIRef('urn:uuid:' + str(uuid.uuid4()))

Now, we can add and remove triples in the graph. If you would like to change only subject, predicate or object in a triple, you have to remove the triple completely and add the changed one, since triples are atomic.

model.add(subject, predicate, object);

model.remove(subject, predicate, object);

g.add((subject, predicate, obj))

g.remove((subject, predicate, obj))

Ok, but how can we store data values like names, numbers and dates? Only at object position you are also allowed to store any kind of character string. Since this is not a resource, you cannot make statements about it, which is why we call it literal (cf. terminal symbols in formal grammars).

import org.apache.jena.rdf.model.Literal;

Property label = ResourceFactory.createProperty("http://www.w3.org/2000/01/rdf-schema#label");

Literal name = ResourceFactory.createPlainLiteral("Douglas Adams"); //or model.createLiteral

model.add(subject, label, name);

from rdflib import Literal

label = URIRef('http://www.w3.org/2000/01/rdf-schema#label')

name = Literal('Douglas Adams')

g.add((subject, label, name))

Literals can have additional meta data:

import org.apache.jena.datatypes.xsd.XSDDatatype;

Literal publicationDate = ResourceFactory.createTypedLiteral("1979-10-12", XSDDatatype.XSDdate); //or model.createTypedLiteral

Literal japaneseName = ResourceFactory.createLangLiteral("ダグラス・アダムズ", "ja"); //or model.createLiteral

from rdflib.namespace import XSD

publicationDate = Literal("1979-10-12", datatype=XSD.date)

japaneseName = Literal('ダグラス・アダムズ', lang='ja')

Visit the wikipage about RPTU and make statements about this page using RDF: turn the information you find in the Infobox of the wikipage into statements. Pick appropriate properties (use this search engine to find existing ones) or invent your own by defining URNs. To refer to resources use their wikipedia links. Use datatypes and language tags for literals if suitable.

We can iterate over statements and optionally give a fixed subject, predicate or object (or a combination of them which is known as basic graph patterns [BGPs]).

for(Statement stmt : model.listStatements().toSet()) {

System.out.println(stmt);

}

for(Statement stmt : model.listStatements(subject, null, (RDFNode) null).toSet()) {

System.out.println(stmt);

}

for s, p, o in g:

print(s, p, o)

for s, p, o in g.triples((subject, None, None)):

print(s, p, o)

This is useful when we would like to simply lookup what is connected over one-hop. However, for more complex queries, we should use a dedicated query language (like SQL for databases). Fortunately, W3C standardized an RDF Query Language which is called SPARQL .

# a comment

SELECT *

{

?s ?p ?o

}

LIMIT 10

The basic idea is that ?-prefixed variables are iteratively bound with values for all possible matches in the graph (subgraph matching).

Learning all aspects of SPARQL takes some time, but it is worthwhile for working with RDF:

almost all triplestores implement SPARQL as the main interface to query and manipulate RDF data.

It is not uncommon that complex use cases result in long and complex queries.

With the libraries we can parse and execute SPARQL queries.

<dependency>

<groupId>org.apache.jena</groupId>

<artifactId>jena-arq</artifactId>

<version>4.7.0</version>

</dependency>

import org.apache.jena.query.QueryExecution;

import org.apache.jena.query.QueryExecutionFactory;

import org.apache.jena.query.QuerySolution;

import org.apache.jena.query.ResultSet;

import org.apache.jena.query.ResultSetFormatter;

QueryExecution qe = QueryExecutionFactory.create(queryStr, model);

ResultSet rs = qe.execSelect();

for(QuerySolution qs : ResultSetFormatter.toList(rs)) {

System.out.println(qs);

}

//or ResultSetFormatter.out(rs)

rs = g.query(queryStr)

for qs in rs:

print(qs)

Whenever you would like to transfer RDF data from one system to another, you can serialize and parse RDF documents in a certain format.

Since URIs can get rather long and often share the same prefix, we should define some prefix mappings first.

Note that an empty prefix name "" is also allowed.

Frequently used prefixes can be looked up at prefix.cc.

import org.apache.jena.shared.PrefixMapping;

import org.apache.jena.vocabulary.XSD;

model.setNsPrefix("wiki", "https://en.wikipedia.org/wiki/");

model.setNsPrefix("wn", "http://wordnet-rdf.princeton.edu/id/");

model.setNsPrefix("xsd", XSD.NS);

//contains frequently used prefixes

PrefixMapping standard = PrefixMapping.Standard;

//CURIE <-> URI

String uri = model.expandPrefix("wiki:Douglas_Adams");

String curie = model.shortForm("https://en.wikipedia.org/wiki/Douglas_Adams");

g.bind("wiki", URIRef("https://en.wikipedia.org/wiki/"))

g.bind("wn", URIRef("http://wordnet-rdf.princeton.edu/id/"))

g.bind("xsd", XSD)

The following table lists frequently used serialization formats, ordered by decreasing importance for us.

| Language | Abbrev. | Extended from | Main Features | Mime-Type | File Ext. | Jena | rdflib |

|---|---|---|---|---|---|---|---|

| Terse RDF Triple Language | Turtle, TTL | N-Triples | Human-friendly and human-readable due to the following features: @prefix directive; ; repeat subject-predicate, , repeat object; (…) lists; boolean, decimal, double, integer literals |

text/turtle |

*.ttl |

"TTL" |

'ttl' |

| N-Triples | NT | Line-based: one line for one triple; . indicates full stop |

application/n-triples |

*.nt |

"NT" |

'nt' |

|

| JavaScript Object Notation for Linked Data | JSON-LD | JSON | Uses JSON to encode triples; useful in JavaScript/Web context; drawback: triples are not clearly visible anymore | application/ld+json |

*.jsonld |

"JSON-LD" |

'json-ld' |

| Notation3 | N3 | Turtle | Adds more features to Turtle, but almost always we do not need them | text/n3;charset=utf-8 |

*.n3 |

"N3" |

'n3' |

| RDF/XML | RDF/XML | XML | Uses XML to express RDF data; not human-friendly: not particularly readable and writable for humans | application/rdf+xml |

*.rdf |

"RDF/XML" |

'pretty-xml' |

The libraries allow you to write and read these formats (always use UTF-8 encoding).

StringWriter sw = new StringWriter();

model.write(sw, "TTL"); //or "NT", "JSON-LD", "N3", "RDF/XML"

System.out.println(sw.toString());

model.read(new FileReader(new File("data.ttl")), null, "TTL");

print(g.serialize(format='ttl')) # or 'nt', 'json-ld', 'n3', 'pretty-xml'

g.parse('data.ttl', format='ttl')

Parse and serialize single RDF nodes in the following way:

import org.apache.jena.sparql.util.NodeFactoryExtra;

import org.apache.jena.graph.Node;

import org.apache.jena.rdf.model.RDFNode;

Node node = NodeFactoryExtra.parseNode("\"literal\"^^<urn:type>");

RDFNode rdfNode = model.asRDFNode(node);

private static String toString(RDFNode rdfNode) {

boolean r = rdfNode.isResource();

return (r?"<":"") + rdfNode.asNode().toString(true) + (r?">":"");

}

Below, all the formats represent the exact same RDF data (triples), however in very different syntaxes. You can always convert between them.

@prefix wiki: <https://en.wikipedia.org/wiki/> .

@prefix wn: <http://wordnet-rdf.princeton.edu/id/> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

wiki:Douglas_Adams <http://www.w3.org/2000/01/rdf-schema#label>

"Douglas Adams" , "ダグラス・アダムズ"@ja ;

wn:01643749-v wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel .

wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel

<http://purl.org/dc/terms/dateSubmitted>

"1979-10-12"^^xsd:date .

<https://en.wikipedia.org/wiki/Douglas_Adams> <http://www.w3.org/2000/01/rdf-schema#label> "Douglas Adams" .

<https://en.wikipedia.org/wiki/Douglas_Adams> <http://www.w3.org/2000/01/rdf-schema#label> "ダグラス・アダムズ"@ja .

<https://en.wikipedia.org/wiki/Douglas_Adams> <http://wordnet-rdf.princeton.edu/id/01643749-v> <https://en.wikipedia.org/wiki/The_Hitchhikers_Guide_to_the_Galaxy_novel> .

<https://en.wikipedia.org/wiki/The_Hitchhikers_Guide_to_the_Galaxy_novel> <http://purl.org/dc/terms/dateSubmitted> "1979-10-12"^^<http://www.w3.org/2001/XMLSchema#date> .

{

"@context" : {

"dateSubmitted" : {

"@id" : "http://purl.org/dc/terms/dateSubmitted",

"@type" : "http://www.w3.org/2001/XMLSchema#date"

},

"label" : {

"@id" : "http://www.w3.org/2000/01/rdf-schema#label"

},

"01643749-v" : {

"@id" : "http://wordnet-rdf.princeton.edu/id/01643749-v",

"@type" : "@id"

},

"wiki" : "https://en.wikipedia.org/wiki/",

"wn" : "http://wordnet-rdf.princeton.edu/id/",

"xsd" : "http://www.w3.org/2001/XMLSchema#"

},

"@graph" : [ {

"@id" : "wiki:Douglas_Adams",

"01643749-v" : "wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel",

"label" : [

"Douglas Adams",

{

"@language" : "ja",

"@value" : "ダグラス・アダムズ"

}

]

}, {

"@id" : "wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel",

"dateSubmitted" : "1979-10-12"

} ]

}

@prefix wiki: <https://en.wikipedia.org/wiki/> .

@prefix wn: <http://wordnet-rdf.princeton.edu/id/> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

wiki:Douglas_Adams <http://www.w3.org/2000/01/rdf-schema#label>

"Douglas Adams" , "ダグラス・アダムズ"@ja ;

wn:01643749-v wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel .

wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel

<http://purl.org/dc/terms/dateSubmitted>

"1979-10-12"^^xsd:date .

<rdf:RDF

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:j.0="http://purl.org/dc/terms/"

xmlns:wiki="https://en.wikipedia.org/wiki/"

xmlns:wn="http://wordnet-rdf.princeton.edu/id/"

xmlns:j.1="http://wordnet-rdf.princeton.edu/id/01643749-"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:xsd="http://www.w3.org/2001/XMLSchema#">

<rdf:Description rdf:about="https://en.wikipedia.org/wiki/Douglas_Adams">

<rdfs:label xml:lang="ja">ダグラス・アダムズ</rdfs:label>

<rdfs:label>Douglas Adams</rdfs:label>

<j.1:v>

<rdf:Description rdf:about="https://en.wikipedia.org/wiki/The_Hitchhikers_Guide_to_the_Galaxy_novel">

<j.0:dateSubmitted rdf:datatype="http://www.w3.org/2001/XMLSchema#date"

>1979-10-12</j.0:dateSubmitted>

</rdf:Description>

</j.1:v>

</rdf:Description>

</rdf:RDF>

Download University0_0.nt.gz (14 MB), load it into a Model and print the number of statements.

Hint: Use GZIPInputStream.

Using University0_0.nt.gz (14 MB),

ub:firstname) is Shelly;ub:takesCourse);http://www.Department2.University0.edu/UndergraduateStudent358).

Put these statements in a new RDF model.

Set for http://www.Department0.University0.edu/ the prefix dp0 and do this also for the other departments (0 to 14).

Print the model in Turtle syntax.

Hint: ub: is not a prefix of a CURIE, it is the schema part of a URI.

Using University0_0.nt.gz (14 MB), formulate and run SPARQL queries for the following requests:

<ub:fullname>) in alphabetical order who took the course (ub:takesCourse) with the name (ub:name) Course46.<ub:Course>).<ub:Publication>) by name (<ub:name>) without duplicates which mention in their text (<ub:publicationText>) the word hypothesis.<ub:teacherOf>) full professors (<ub:FullProfessor>) or assistant professors (<ub:AssistantProfessor>).

Print the parsed query and its result (using ResultSetFormatter).

Hint: in Java 17 you can use multiline strings """ (three double quote marks).

Interpret and explain in your own words what the following RDF statements written in Turtle mean.

@prefix : <http://www.dfki.uni-kl.de/~mschroeder/example.ttl#> .

:University_of_Kaiserslautern

a :Research_University ;

:commonly_referred_to "TU Kaiserslautern"@de , "TUK" ;

:located_in :Kaiserslautern ,

:Germany .

:Kaiserslautern a :City ;

:located_in

:Germany .

:Germany a :Country .

:Arnd_Poetzsch_Heffter :presidentOf :University_of_Kaiserslautern .

:University_of_Kaiserslautern :faculties ( :Architecture :Biology :Chemistry ) .

While a set of RDF triples form an RDF Graph, a set of RDF graphs form an RDF Dataset. This is done by extending triples to quadruples (a.k.a. Quads, i.e. they consist of four parts). The fourth part states in which RDF graph the triple exists.

import org.apache.jena.query.Dataset;

import org.apache.jena.query.DatasetFactory;

Dataset dataset = DatasetFactory.create();

In order to put triples into graphs, we can use the unnamed (always existing) default graph (model) or we create graphs using URIs. Putting a triple in a graph just sets the fourth part of the quadruple to the graph's URI (for default graph it is null).

dataset.getDefaultModel().add(s, p, o);

dataset.getNamedModel("urn:graph:g1").add(s, p, o);

To read and write (I/O) RDF datasets, there are the following serialization formats.

| Language | Abbrev. | Extended from | Main Features | Mime-Type | File Ext. | Jena | rdflib |

|---|---|---|---|---|---|---|---|

| TriG (probably Triple Graph) | Turtle | Like Turtle, except adding Graphs with {...} syntax | application/trig |

*.trig |

"TriG" |

'trig' |

|

| N-Quads | NQ | N-Triples | Like N-Triples, except fourth component is graph URI | application/n-quads |

*.nq |

"NQ" |

'nquads' |

import org.apache.jena.riot.Lang;

import org.apache.jena.riot.RDFDataMgr;

RDFDataMgr.write(sw, dataset, Lang.NQ);

Datasets support transactions.

Use the Txn utility class to run read and write operations in transactions.

While execute methods just execute a Runnable in a transaction, calculate methods

allow to return a calculated value at the end of the transaction.

import org.apache.jena.system.Txn;

Dataset datasetTxn = DatasetFactory.createTxnMem();

Txn.executeRead(datasetTxn, () -> {

datasetTxn.getDefaultModel().listStatements();

});

Txn.executeWrite(datasetTxn, () -> {

datasetTxn.getDefaultModel().add(s,p,o);

});

int i = Txn.calculateRead(datasetTxn, () -> {

return datasetTxn.getDefaultModel().listStatements().toList().get(0).getInt();

});

boolean b = Txn.calculateWrite(datasetTxn, () -> {

datasetTxn.getDefaultModel().add(s,p,o);

return true;

});

Until now, we created models and datasets in memory. To have a persistent RDF store on harddrive we can use Jena's TDB (Triple DataBase). Remember to run every operation in a transaction.

<dependency>

<groupId>org.apache.jena</groupId>

<artifactId>jena-tdb2</artifactId>

<version>4.7.0</version>

</dependency>

import org.apache.jena.tdb2.TDB2Factory;

Dataset tdb = TDB2Factory.connectDataset("tdb2");

tdb.executeRead(() -> {

boolean result = QueryExecutionFactory.create("ASK { ?s ?p \"l\" }", dataset).execAsk();

});

tdb.executeWrite(() -> {

dataset.getDefaultModel().removeAll(s, p, null);

});

tdb.close();

By the way, the connectDataset method creates (if not existing yet) in a folder named tdb2 files on disk (*.bpt, *.dat and *.idn) to store the following maps for fast subject, predicate, object and graph retrieval.

Using University0_0.nt.gz (14 MB), load the following triples into separated graphs in a TDB triplestore.

urn:graph:graduateStudents load all triples about graduate students (ub:GraduateStudent), except ...ub:takesCourse) which should be put in the named graph urn:graph:takesCourses.List all named graphs created in the TDB triplestore together with their number of statements. Write a SPARQL query which lists the course URIs of students named Elaine.

So far, we only made statements (facts) about persons or things with assertions (also known as the ABox part). However, we can also use RDF to make statements about a terminology (vocabulary of a domain of interest, also known as the TBox part). Since this defines what can possibly exist and how it relates in a domain, we call it Ontology (taken from philosophy: study of concepts such as existence, being, becoming, and reality).

In its simplest form, ontologies define two aspects:

The following table lists frequently used ontologies, ordered by decreasing importance for us.

| Ontology | Abbrev. / Prefix | Reference | Freq. Used |

|---|---|---|---|

|

Resource Description Framework (using RDF we describe the terminology of RDF) |

RDF / rdf

|

http://www.w3.org/1999/02/22-rdf-syntax-ns# Jena: import org.apache.jena.vocabulary.RDFrdflib: from rdflib.namespace import RDF

|

rdf:type rdf:Property rdf:Statement |

|

Resource Description Framework Schema (using RDF we describe a schema terminology) |

RDFS / rdfs

|

http://www.w3.org/2000/01/rdf-schema# Jena: import org.apache.jena.vocabulary.RDFSrdflib: from rdflib.namespace import RDFS

|

rdfs:Class rdfs:label rdfs:comment rdfs:subClassOf rdfs:domain rdfs:range rdfs:Resource |

|

XML Schema Definition |

XSD / xsd

|

http://www.w3.org/2001/XMLSchema# Jena: import org.apache.jena.vocabulary.XSDrdflib: from rdflib.namespace import XSD

|

xsd:integer xsd:double xsd:decimal xsd:boolean xsd:date xsd:dateTime |

|

Nepomuk Shared Desktop Ontologies (we often use the Personal Information Model Ontology) |

PIMO / pimo

|

http://www.semanticdesktop.org/ontologies/2007/11/01/pimo# Jena: (not available) rdflib: PIMO = Namespace("http://www.semanticdesktop.org/ontologies/2007/11/01/pimo#")

|

pimo:Person pimo:Organization pimo:Topic pimo:Event pimo:Meeting pimo:Project pimo:Task |

|

Schema.org |

SDO / sdo

|

https://schema.org/ Jena: (not available) rdflib: SDO = Namespace("https://schema.org/")

|

sdo:Movie sdo:Person |

|

Friend of a Friend |

FOAF / foaf

|

http://xmlns.com/foaf/0.1/ Jena: import org.apache.jena.sparql.vocabulary.FOAFrdflib: from rdflib.namespace import FOAF

|

foaf:Person foaf:firstName foaf:lastName foaf:Project |

|

Simple Knowledge Organization System |

SKOS / skos

|

http://www.w3.org/2004/02/skos/core# Jena: import org.apache.jena.vocabulary.SKOS;rdflib: from rdflib.namespace import SKOS

|

skos:Concept skos:broader skos:narrower |

|

Dublin Core Metadata Initiative |

DC / dc

|

http://purl.org/dc/elements/1.1/ Jena: import org.apache.jena.vocabulary.DC;rdflib: from rdflib.namespace import DC

|

dc:title dc:description dc:date dc:creator |

|

Web Ontology Language |

OWL / owl

|

http://www.w3.org/2002/07/owl# Jena: import org.apache.jena.vocabulary.OWL2;rdflib: from rdflib.namespace import OWL

|

owl:Thing owl:Class owl:NamedIndividual owl:sameAs owl:inverseOf |

When making assertions, we encourage you to reuse (refer to) as much classes and properties as possible from existing ontologies. This way, your statements can be easily interpreted by looking up (dereference) the already defined meanings. Use the search in linked open vocabularies to find more classes and properties in public ontologies. In order to look up ontology prefixes, use prefix.cc.

Resource douglasAdams = ResourceFactory.createResource("https://en.wikipedia.org/wiki/Douglas_Adams");

model.add(douglasAdams, RDF.type, FOAF.Person);

model.add(douglasAdams, RDF.type, OWL2.NamedIndividual);

model.add(douglasAdams, RDFS.label, "Douglas Adams");

model.add(douglasAdams, RDFS.comment, "An English author, screenwriter, essayist, humorist, satirist and dramatist.");

model.add(douglasAdams, DC.title, "Douglas Adams");

douglasAdams = URIRef("https://en.wikipedia.org/wiki/Douglas_Adams")

g.add((douglasAdams, RDF.type, FOAF.Person))

g.add((douglasAdams, RDF.type, OWL.NamedIndividual))

g.add((douglasAdams, RDFS.label, Literal("Douglas Adams")))

g.add((douglasAdams, RDFS.comment, Literal("An English author, screenwriter, essayist, humorist, satirist and dramatist.")))

g.add((douglasAdams, DC.title, Literal("Douglas Adams")))

Modelling an ontology from a domain of interest is called ontology engineering, while learning it from text more automatically is called ontology learning. Both are considered knowledge acquisition activities. This tutorial will not cover, how to model an ontology from a domain of interest.

Create an RDF model with statements about this website https://www.dfki.uni-kl.de/~mschroeder/tutorial/semtec.

Try using already existing classes and properties from code. Add appropriate prefixes and print the model in Turtle syntax.

Besides ontologies for certain domains, you can also make use of assertions from publicly available knowledge graphs. Since this linked data is usually open (i.e. freely available), such RDF datasets are summarized under the umbrella term Linked Open Data (Cloud). The domains include for example geography, government, life sciences, linguistics and media.

A notable knowledge graph is the RDF version of Wikipedia which is DBpedia. Its main idea is the mapping of infobox tables to RDF statements using its ontology.

@prefix dbo: <http://dbpedia.org/ontology/> .

@prefix dbr: <http://dbpedia.org/resource/> .

dbr:Douglas_Adams dbo:birthName "Douglas Noel Adams"@en ;

dbo:birthDate "1952-03-11"^^xsd:date ;

dbo:birthPlace dbr:Cambridge , dbr:Cambridgeshire ;

dbo:deathDate "2001-05-11"^^xsd:date ;

dbo:deathPlace <http://dbpedia.org/resource/Montecito,_California> ;

dbo:restingPlace dbr:Highgate_Cemetery , dbr:London ;

dbo:occupation dbr:Author , dbr:Screenwriter , dbr:Essay ,

dbr:List_of_humorists , dbr:List_of_satirists_and_satires , dbr:Playwright .

Another notable knowledge graph is Wikidata which is, in contrast to DBpedia, manually created by contributors. It can be queried with SPARQL or downloaded (see truthy dumps).

Usually, such knowledge graphs cover rather common knowledge of a certain domain, which is why they are less useful for personal knowledge graph scenarios.

Query DBpedia's SPARQL endpoint https://dbpedia.org/sparql using Java code.

Find fictional characters (dbo:FictionalCharacter) with their spouses (dbo:spouse).

Hint: Use QueryExecutionHTTP.

"Douglas Adams created The Hitchhiker's Guide to the Galaxy", claims Mark Carwardine.

This sentence has two statements.

This statement about a statement can be expressed in RDF with so-called RDF-star (or written RDF*)

which introduces quoted triples.

They are written with double angle brackets << ... >>.

@prefix wiki: <https://en.wikipedia.org/wiki/> .

@prefix wn: <http://wordnet-rdf.princeton.edu/id/> .

<< wiki:Douglas_Adams wn:01643749-v wiki:The_Hitchhikers_Guide_to_the_Galaxy_novel >> wn:00758383-v wiki:Mark_Carwardine .

Note that we see here only one statement which is the 'claim' statement.

The quoted triple is not part of the RDF graph since it is hidden in the (subject) resource.

It has to be repeated in unquoted way to be in the RDF graph as well.

Use on the resource getStmtTerm() to get the statement (i.e. quoted triple).

Jena supports RDF-star. You need Jena ARQ library for RDF I/O technology (RIOT) to parse and write RDF-star. It is supported in the following formats:

"TTL""NT"

Unfortunately, Version 3.17.0 does not support the {| ... |} annotation syntax.

StringWriter sw = new StringWriter();

model.write(sw, "TTL"); //or "NT"

System.out.println(sw.toString());

model.read(new FileReader(new File("data.ttl")), null, "TTL");

In rdflib there seems to be an ongoing implementation.

Since we are working with statements, we can also apply forms of logical reasoning in RDF.

This includes two parts: rules usually in form of [if premises then conclusions] (modus ponens) and facts in form of statements (premises and conclusions, depending on occurance in rules).

We distinguish the following three reasoning forms.

| Reasoning | What we have | What we get | Usage for us | |

|---|---|---|---|---|

|

Deduction

(inference) a.k.a. forward chaining (data-driven) |

Premises | Rules | Conclusions | We often infer new statements from existing statements |

| Induction ("(machine) learning") | Premises | Conclusions | Rules | We sometimes train machine learning models from knowledge graphs |

|

Abduction

("reasoning of detectives") a.k.a. backward chaining (goal-driven) |

Conclusions | Rules | Premises | We sometimes would like to check a deduction tree |

A rule engine can be used to perform forward and backward chaining.

Jena provides a general purpose rule engine.

For working with inferred statements use the InfModel interface.

The rule language in Jena follows a certain syntax.

In the example, we apply the rule that every person is also a resource in RDF, yet more complex rules can easily be defined, also including builtin functions.

import org.apache.jena.reasoner.rulesys.GenericRuleReasoner;

import org.apache.jena.reasoner.rulesys.Rule;

import org.apache.jena.rdf.model.InfModel;

String rules =

"@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>.\n" +

"@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#>.\n" +

"@prefix foaf: <http://xmlns.com/foaf/0.1/>.\n" +

"[ruleA: (?s rdf:type foaf:Person) -> (?s rdf:type rdfs:Resource)]";

GenericRuleReasoner reasoner = new GenericRuleReasoner(Rule.parseRules(rules));

InfModel infModel = ModelFactory.createInfModel(reasoner, model);

Model raw = infModel.getRawModel();

Model deduced = infModel.getDeductionsModel();

In rdflib there seems to be no rule engine implementation. As an alternative in Python, you may consider PyKE (Python Knowledge Engine). However, this requires to mediate between RDF and PyKE.

If logging is enabled, we can reproduce how certain statements are derived.

import org.apache.jena.reasoner.Derivation;

import org.apache.jena.reasoner.rulesys.RuleDerivation;

reasoner.setDerivationLogging(true);

Iterator<Derivation> iter = infModel.getDerivation(ResourceFactory.createStatement(

douglasAdams,

RDF.type,

RDFS.Resource));

while(iter.hasNext()) {

RuleDerivation ruleDerivation = (RuleDerivation) iter.next();

System.out.println(

ruleDerivation.getRule() + " " +

ruleDerivation.getMatches() + " " +

ruleDerivation.getConclusion()

);

}

Besides defining our own rule set, we can rely on already defined logic systems like description logic (DL).

Fortunately, as soon as we use RDFS and an RDFS reasoner, we get a DL variant.

This can also be included in a rule set with @include <RDFS>.

InfModel rdfsInfModel = ModelFactory.createRDFSModel(model);

This results in the following RDFS entailment rule applications.

| Name | If | Then |

|---|---|---|

|

"Everything is a resource, but properties are on predicate position" |

(x, p, y) |

(x, rdf:type, rdfs:Resource) (y, rdf:type, rdfs:Resource) |

(x, p, y) |

(p, rdf:type, rdf:Property) |

|

| "A class is a specific resource" |

(c, rdf:type, rdfs:Class) |

(c, rdfs:subClassOf, rdfs:Resource) |

| Class Hierarchy |

(c, rdf:type, rdfs:Class) |

(c, rdfs:subClassOf, c) |

(c, rdfs:subClassOf, d) (d, rdfs:subClassOf, e) |

(c, rdfs:subClassOf, e) |

|

(x, rdf:type, c) (c, rdfs:subClassOf, d) |

(x, rdf:type, d) |

|

| Property Hierarchy |

(p, rdf:type, rdf:Property) |

(p, rdfs:subPropertyOf, p) |

(p, rdfs:subPropertyOf, q) (q, rdfs:subPropertyOf, r) |

(p, rdfs:subPropertyOf, r) |

|

(x, p, y) (p, rdfs:subPropertyOf, q) |

(x, q, y) |

|

| Domain & Range |

(x, p, y) (p, rdfs:domain, d) |

(x, rdf:type, d) |

(x, p, y) (p, rdfs:range, r) |

(y, rdf:type, r) |

More expressive reasoning can be done with OWL (Lite < DL < Full), however we rather seldom use it due to its higher time complexity.

Consider the following incomplete RDF model:

@prefix : <http://www.dfki.uni-kl.de/~mschroeder/example.ttl#> .

:University_of_Kaiserslautern :commonly_referred_to "TU Kaiserslautern"@de , "TUK" ;

:located_in :Kaiserslautern .

:Kaiserslautern a :City ;

:located_in :Germany .

:Arnd_Poetzsch_Heffter :presidentOf :University_of_Kaiserslautern .

Define the following rules in a GenericRuleReasoner to deduce the missing statements.

Hint: Define appropriate prefixes and use rdf:type in your rules.

Often we have to work with semi-structured data sources such as CSV, XML or JSON which are not expressed in RDF. In such cases, we can map data to RDF statements in order to work with it using semantic technologies. Using a programming language to implement the mapping can take much effort and time. Therefore, a mapping language can be useful to define how sources shall be mapped to RDF. A popular one is the RDF Mapping Language (RML) in combination with an RML engine (RML processor, RML mapper).

RML Mapper is a Java implementation of an RML engine. Since it includes old Jena dependencies, we exclude them.

<dependency>

<groupId>be.ugent.rml</groupId>

<artifactId>rmlmapper</artifactId>

<version>4.9.0</version>

<exclusions>

<exclusion>

<groupId>org.apache.jena</groupId>

<artifactId>apache-jena-libs</artifactId>

</exclusion>

<exclusion>

<groupId>com.github.rdfhdt</groupId>

<artifactId>hdt-java</artifactId>

</exclusion>

</exclusions>

</dependency>

In rdflib there seems to be no RML engine implementation. As an alternative in Python, you may consider pyrml.

RML is also expressed with RDF (there is also a YAML syntax and an editor). Since RML is an extension of R2RML (mapping ralational databases), its concepts are often reused.

@prefix rr: <http://www.w3.org/ns/r2rml#>.

@prefix rml: <http://semweb.mmlab.be/ns/rml#>.

@prefix ql: <http://semweb.mmlab.be/ns/ql#>.

@prefix xsd: <http://www.w3.org/2001/XMLSchema#>.

@prefix foaf: <http://xmlns.com/foaf/0.1/>.

RML's basic idea is to refer to logical sources and define how subjects and their prediate-objects shall be mapped.

Use templates with variable references {…} or direct references.

We use BlankNode syntax […] to define resources without giving them URIs.

HG2G.csv Input Data

id,fn,ln,age,knows

1,Arthur,Dent,35,2

1,Arthur,Dent,35,3

2,Ford,Prefect,38,1

2,Ford,Prefect,38,3

3,Marvin,,4274936,1

3,Marvin,,4274936,2

The first row is usually the header. Use column names to refer to them.

RML Mapping File

@prefix : <file://data/HG2G-csv.rml.ttl#>.

:mapping a rr:TriplesMap;

rml:logicalSource [

rml:source "HG2G.csv" ;

rml:referenceFormulation ql:CSV

];

rr:subjectMap [

rr:template "file://data/HG2G.csv.ttl#{id}";

rr:class foaf:Person

];

rr:predicateObjectMap [

rr:predicate foaf:firstName;

rr:objectMap [

rml:reference "fn"

]

];

rr:predicateObjectMap [

rr:predicate foaf:lastName;

rr:objectMap [

rml:reference "ln"

]

];

rr:predicateObjectMap [

rr:predicate foaf:age;

rr:objectMap [

rml:reference "age";

rr:datatype xsd:int

]

];

rr:predicateObjectMap [

rr:predicate foaf:knows;

rr:objectMap [

rr:template "file://data/HG2G.csv.ttl#{knows}"

]

].

HG2G.xml Input Data

<hg2g>

<person id="1" fn="Arthur" ln="Dent" age="35">

<knows>2</knows>

<knows>3</knows>

</person>

<person id="2" fn="Ford" ln="Prefect" age="38">

<knows>1</knows>

<knows>3</knows>

</person>

<person id="3" fn="Marvin" age="4274936">

<knows>1</knows>

<knows>2</knows>

</person>

</hg2g>

Use XPath to iterate and refer to XML elements and attribute values.

RML Mapping File

@prefix : <file://data/HG2G-xml.rml.ttl#>.

:mapping a rr:TriplesMap;

rml:logicalSource [

rml:source "HG2G.xml" ;

rml:referenceFormulation ql:XPath;

rml:iterator "/hg2g/person";

];

rr:subjectMap [

rr:template "file://data/HG2G.xml.ttl#{@id}";

rr:class foaf:Person

];

rr:predicateObjectMap [

rr:predicate foaf:firstName;

rr:objectMap [

rml:reference "@fn"

]

];

rr:predicateObjectMap [

rr:predicate foaf:lastName;

rr:objectMap [

rml:reference "@ln"

]

];

rr:predicateObjectMap [

rr:predicate foaf:age;

rr:objectMap [

rml:reference "@age";

rr:datatype xsd:int

]

];

rr:predicateObjectMap [

rr:predicate foaf:knows;

rr:objectMap [

rr:template "file://data/HG2G.xml.ttl#{knows}"

]

].

HG2G.json Input Data

[

{

"id": "1",

"fn": "Arthur",

"ln": "Dent",

"age": 35,

"knows": [{ "id": "2" }, { "id": "3" }

]

},

{

"id": "2",

"fn": "Ford",

"ln": "Prefect",

"age": 38,

"knows": [{ "id": "1" }, { "id": "3" }

]

},

{

"id": "3",

"fn": "Marvin",

"ln": "",

"age": 4274936,

"knows": [{ "id": "1" }, { "id": "2" }

]

}

]

Use JSONPath to iterate and refer to JSON elements and values.

RML Mapping File

@prefix : <file://data/HG2G-json.rml.ttl#>.

:mapping a rr:TriplesMap;

rml:logicalSource [

rml:source "HG2G.json" ;

rml:referenceFormulation ql:JSONPath;

rml:iterator "$.*";

];

rr:subjectMap [

rr:template "file://data/HG2G.json.ttl#{id}";

rr:class foaf:Person

];

rr:predicateObjectMap [

rr:predicate foaf:firstName;

rr:objectMap [

rml:reference "fn"

]

];

rr:predicateObjectMap [

rr:predicate foaf:lastName;

rr:objectMap [

rml:reference "ln"

]

];

rr:predicateObjectMap [

rr:predicate foaf:age;

rr:objectMap [

rml:reference "age";

rr:datatype xsd:int

]

];

rr:predicateObjectMap [

rr:predicate foaf:knows;

rr:objectMap [

rr:template "file://data/HG2G.json.ttl#{knows.*.id}"

]

].

In Java we use RML Mapper to perform the mapping.

import be.ugent.rml.Executor;

import be.ugent.rml.functions.FunctionLoader;

import be.ugent.rml.records.RecordsFactory;

import be.ugent.rml.store.QuadStore;

import be.ugent.rml.store.RDF4JStore;

import org.eclipse.rdf4j.rio.RDFFormat;

RDF4JStore rmlStore = new RDF4JStore();

rmlStore.read(new FileInputStream(mappingFile), null, RDFFormat.TURTLE);

RecordsFactory factory = new RecordsFactory(mappingFile.getParentFile().getAbsolutePath());

QuadStore outputStore = new RDF4JStore();

FunctionLoader functionLoader = new FunctionLoader();

Executor executor = new Executor(rmlStore, factory, functionLoader, outputStore, null);

QuadStore qs = executor.execute(null);

qs.write(new FileWriterWithEncoding(outFile, StandardCharsets.UTF_8), "turtle");However, often we have to transform (convert) data, since it is not in the shape we need it. Therefore, RML allows us to invoke functions from a programming language during the mapping.

First, we implement a static method in Java in a separate project and build a JAR file functions-1.0.0-SNAPSHOT.jar.

package de.uni.kl.dfki.mschroeder.tutorial.semtec;

import java.time.LocalDateTime;

public class Functions {

public static Integer getYear(Integer age) {

return LocalDateTime.now().getYear() - age;

}

}Second, we have to describe the function using the Function Ontology (FnO). This definition can be automatically generated using Java reflections.

@prefix fnoi: <https://w3id.org/function/vocabulary/implementation#> .

@prefix fno: <https://w3id.org/function/ontology#> .

@prefix fnom: <https://w3id.org/function/vocabulary/mapping#> .

@prefix fnml: <http://semweb.mmlab.be/ns/fnml#> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

<java:de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions.getYear>

a fno:Function ;

rdfs:label "getYear" ;

fno:expects ( <java:parameter.integer.0> ) ;

fno:returns ( <java:return.integer> ) .

<java:de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions>

a fnoi:JavaClass ;

<http://usefulinc.com/ns/doap#download-page>

"functions/target/functions-1.0.0-SNAPSHOT.jar" ; # note: has to be an absolute path

fnoi:class-name "de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions" .

[ a fno:Mapping ;

fno:function <java:de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions.getYear> ;

fno:implementation <java:de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions> ;

fno:methodMapping [ a fnom:StringMethodMapping ;

fnom:method-name "getYear"

]

] .

<java:parameter.integer.0>

a fno:Parameter ;

rdfs:label "Input Integer 0" ;

fno:predicate <java:parameter.predicate.integer.0> ;

fno:required true ;

fno:type xsd:int .

<java:return.integer>

a fno:Output ;

rdfs:label "Output Integer" ;

fno:predicate <java:return.predicate.integer> ;

fno:type xsd:int .The function definition has to be loaded.

QuadStore fnoQuadStore = new RDF4JStore();

fnoQuadStore.read(new FileInputStream(fnoFile), null, RDFFormat.TURTLE);

FunctionLoader functionLoader = new FunctionLoader(fnoQuadStore);This way we can use it in the mapping.

@prefix dbo: <https://dbpedia.org/ontology/> .

@prefix : <file://data/HG2G-csv-fno.rml.ttl#>.

:mapping a rr:TriplesMap;

rml:logicalSource [

rml:source "HG2G.csv" ;

rml:referenceFormulation ql:CSV

];

rr:subjectMap [

rr:template "file://data/HG2G.csv.ttl#{id}";

rr:class foaf:Person

];

rr:predicateObjectMap [

rr:predicate dbo:birthYear;

rr:objectMap [

a fnml:FunctionMap ;

fnml:functionValue [

rr:predicateObjectMap [

rr:predicate fno:executes ;

rr:object <java:de.uni.kl.dfki.mschroeder.tutorial.semtec.Functions.getYear>

] ;

rr:predicateObjectMap [

rr:predicate <java:parameter.predicate.integer.0> ;

rr:objectMap [ rml:reference "age" ]

]

] ;

rr:datatype xsd:int

]

].A patch consists of changes which usually update, fix or improve computer programs. However, patches can also be used to update datasets, in our case, RDF graphs.

The open source library RDF Delta implements a patching mechanism for RDF in its RDF Patch module. Since it includes old Jena dependencies, we exclude them. Version 0.8.2 is the latest version compatible with Java 8.

<dependency>

<groupId>org.seaborne.rdf-delta</groupId>

<artifactId>rdf-patch</artifactId>

<version>0.8.2</version>

<exclusions>

<exclusion>

<groupId>org.apache.jena</groupId>

<artifactId>apache-jena-libs</artifactId>

</exclusion>

</exclusions>

</dependency>

In rdflib there seems to be no library which supports patching RDF.

Suppose a knowledge engineer (KE) downloads the following subset of an RDF graph in order to edit it locally.

@prefix skos: <http://www.w3.org/2004/02/skos/core#> .

<pimo:1549008225287:24>

skos:prefLabel "Graph Embedings" ;

<pimo:thing#hasSuperTopic> <pimo:1452006681033:5> .

<pimo:1342797660296:20>

skos:prefLabel "Artificial Intelligence" .

<pimo:1452006681033:5>

skos:prefLabel "Peer-to-Peer" .

To observe what changes happen to the RDF model, an implementation of the RDFChanges interface is necessary (in our case RDFChangesCollector).

RDF patches are able to store meta data in form of header information.

During the session, the KE corrects the typo in Graph Embeddings and states the correct super topic relation.

Instead of Java code, this could be done in a GUI.

import org.seaborne.patch.changes.RDFChangesCollector;

import org.seaborne.patch.RDFPatchOps;

import org.seaborne.patch.RDFPatch;

import org.seaborne.patch.items.ChangeItem;

import org.apache.jena.graph.NodeFactory;

RDFChangesCollector collector = new RDFChangesCollector();

Graph observedGraph = RDFPatchOps.changes(model.getGraph(), collector);

Model observedModel = ModelFactory.createModelForGraph(observedGraph);

collector.header("authKey", NodeFactory.createLiteral("43538d81-4027-4106-9a5f-063405827d82"));

observedModel.removeAll(graphEmbeddings, hasSuperTopic, null);

observedModel.add(graphEmbeddings, hasSuperTopic, artificialIntelligence);

observedModel.removeAll(graphEmbeddings, SKOS.prefLabel, null);

observedModel.add(graphEmbeddings, SKOS.prefLabel, "Graph Embeddings");

//I/O

String patchSyntax = RDFPatchOps.str(collector.getRDFPatch());

System.out.println(patchSyntax);

RDFPatchStored patch = (RDFPatchStored) RDFPatchOps.read(new ByteArrayInputStream(patchSyntax.getBytes()));

List<ChangeItem> changeItems = patch.getActions();

The patch can be serialized for storing in a document or transferring to another system. Conversely, serialized patches can be parsed and accessed in-memory using Java Beans. A patch document lists Header entries and what triples are Added and what are Deleted.

H authkey "43538d81-4027-4106-9a5f-063405827d82" .

D <pimo:1549008225287:24> <pimo:thing#hasSuperTopic> <pimo:1452006681033:5> .

A <pimo:1549008225287:24> <pimo:thing#hasSuperTopic> <pimo:1342797660296:20> .

D <pimo:1549008225287:24> <http://www.w3.org/2004/02/skos/core#prefLabel> "Graph Embedings" .

A <pimo:1549008225287:24> <http://www.w3.org/2004/02/skos/core#prefLabel> "Graph Embeddings" .

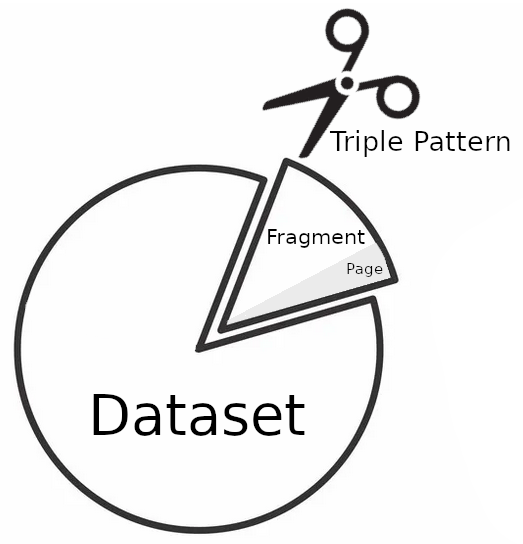

Performing complex SPARQL queries on a single server can lead to high server costs, low availability and bandwidth. To redistribute the load between clients and servers, a solution is to only allow querying fragments (isolated, incomplete parts of an RDF graph), so-called Linked Data Fragments. The basic idea is that only Basic Graph Patterns (BGP) (so-called Triple Patterns) are evaluated by the server, while the client filters, joins, etc. the results. BGPs have the form:

To implement a Triple Pattern Fragments servers, developers can consult the unofficial draft of the Triple Pattern Fragments specification. Given a dataset hosted on a server, a (possibly paginated) fragment is an RDF document response to a triple pattern request by a client. A fragment response basically consists of two parts:

To implement a server, the micro framework Spark is used in the example below. Of cause any HttpServlet implementation can alternatively be used, for example Jetty.

<dependency>

<groupId>com.sparkjava</groupId>

<artifactId>spark-core</artifactId>

<version>2.9.3</version>

</dependency>

A Triple Pattern Fragments server has to provide only one HTTP GET route to let clients query fragments. The major part of the source code is about forming the fragment's meta data. Since meta data is passed in a separate RDF graph (data in default graph), the RDF serialization format TriG is used. Optional authentication information can be passed in the request's header.

import org.apache.jena.graph.Node;

import org.apache.jena.query.Dataset;

import org.apache.jena.query.DatasetFactory;

import org.apache.jena.rdf.model.Model;

import org.apache.jena.rdf.model.ModelFactory;

import org.apache.jena.rdf.model.Property;

import org.apache.jena.rdf.model.Resource;

import org.apache.jena.riot.Lang;

import org.apache.jena.riot.RDFDataMgr;

import org.apache.jena.sparql.util.NodeFactoryExtra;

import org.apache.jena.sparql.vocabulary.FOAF;

import org.apache.jena.vocabulary.RDF;

import org.apache.jena.vocabulary.RDFS;

import org.apache.jena.vocabulary.VOID;

import spark.Request;

import spark.Response;

import spark.Spark;

public static void main(String[] args) {

Spark.port(8081);

Spark.get("/fragment", TriplePatternFragments::getFragment);

Spark.awaitInitialization();

}

private static Object getFragment(Request req, Response resp) {

//meta data

Model metadata = ModelFactory.createDefaultModel();

Resource metadataGraph = metadata.createResource(req.url() + "#metadata");

Resource fragment = metadata.createResource(req.url());

Resource hydraSearch = metadata.createResource();

Map<String, Property> hydraMap = new HashMap<>();

hydraMap.put("s", RDF.subject);

hydraMap.put("p", RDF.predicate);

hydraMap.put("o", RDF.object);

//the primary topic of the meta data graph is the fragment

metadata.add(metadataGraph, FOAF.primaryTopic, fragment);

Resource dataset = metadata.createResource("http://localhost:8081/fragment#dataset");

metadata.add(dataset, RDF.type, VOID.Dataset);

metadata.add(dataset, RDF.type, Hydra.Collection);

//the fragment is a subset of the whole dataset

metadata.add(dataset, VOID.subset, fragment);

//explain how the dataset can be searched

metadata.add(dataset, Hydra.search, hydraSearch);

//explain the template to search

metadata.add(hydraSearch, Hydra.template, "http://localhost:8081/fragment{?s,p,o}");

//map URL query param keys to RDF properties

for(Entry<String, Property> entry : hydraMap.entrySet()) {

Resource mapping = metadata.createResource();

metadata.add(hydraSearch, Hydra.mapping, mapping);

metadata.add(mapping, Hydra.variable, entry.getKey());

metadata.add(mapping, Hydra.property, entry.getValue());

}

String s = req.queryParams("s");

String p = req.queryParams("p");

String o = req.queryParams("o");

Model dataModel = evaluateTriplePattern(s, p, o);

//meta data about the fragment

metadata.add(fragment, RDF.type, Hydra.PartialCollectionView);

//a fragment's subset is itself

metadata.add(fragment, VOID.subset, fragment);

long totalTriples = dataModel.size();

metadata.addLiteral(fragment, VOID.triples, totalTriples);

metadata.addLiteral(fragment, Hydra.totalItems, totalTriples);

Dataset ds = DatasetFactory.create();

ds.getDefaultModel().setNsPrefix("hydra", Hydra.NS);

ds.getDefaultModel().setNsPrefix("rdf", RDF.uri);

ds.getDefaultModel().setNsPrefix("void", VOID.NS);

//graph about meta data

ds.addNamedModel(metadataGraph.getURI(), metadata);

//default graph is data graph

ds.setDefaultModel(dataModel);

//write in trig format

StringWriter trigSW = new StringWriter();

RDFDataMgr.write(trigSW, ds, Lang.TRIG);

resp.type("application/trig;charset=utf-8");

resp.header("Access-Control-Allow-Origin", "*");

return trigSW.toString();

}

The evaluateTriplePattern method is the procedure

which actually evaluates a triple pattern (s,p,o) and returns

matching RDF triples.

Since object o can also be a literal (indicated by a starting quote), we have to parse it using regular expression.

import java.util.regex.Matcher;

import java.util.regex.Pattern;

private static final Pattern STRINGPATTERN = Pattern.compile("^\"(.*)\"(?:@(.*)|\\^\\^<?([^<>]*)>?)?$");

private static Model evaluateTriplePattern(String s, String p, String o) {

Model dataModel = ModelFactory.createDefaultModel();

Resource subject = null;

Property predicate = null;

RDFNode object = null;

if(s != null) {

subject = dataModel.createResource(s);

}

if(p != null) {

predicate = dataModel.createProperty(p);

}

if(o != null) {

if(o.charAt(0) == '\"') {

Matcher matcher = STRINGPATTERN.matcher(o);

if (matcher.matches()) {

String label = matcher.group(1);

String langTag = matcher.group(2);

String typeURI = matcher.group(3);

if (langTag != null)

object = dataModel.createLiteral(label, langTag);

else if (typeURI != null)

object = dataModel.createTypedLiteral(label, typeURI);

else

object = dataModel.createLiteral(label);

} else {

throw new RuntimeException("Literal parsing failed for " + o);

}

} else {

object = dataModel.createResource(o);

}

}

//TODO match subject, predicate, object and fill dataModel

return dataModel;

}

A similar implemention can be archived with flask and rdflib. There is also a Python implementation on GitHub.

An empty repsonse looks like the following (see also a DBpedia example).

@prefix hydra: <http://www.w3.org/ns/hydra/core#> .

@prefix void: <http://rdfs.org/ns/void#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

# extra graph for meta data

<http://localhost:8081/fragment#metadata> {

# the primary topic of the meta data graph is the fragment

<http://localhost:8081/fragment#metadata>

foaf:primaryTopic <http://localhost:8081/fragment> .

# information about the dataset

<http://localhost:8081/fragment#dataset>

a void:Dataset , hydra:Collection ;

void:subset <http://localhost:8081/fragment> ;

# how the dataset is queried

hydra:search [

hydra:template "http://localhost:8081/fragment{?s,p,o}" ;

hydra:mapping [ hydra:property rdf:subject ;

hydra:variable "s"

] ;

hydra:mapping [ hydra:property rdf:predicate ;

hydra:variable "p"

] ;

hydra:mapping [ hydra:property rdf:object ;

hydra:variable "o"

]

] .

# information about the fragment

<http://localhost:8081/fragment>

a hydra:PartialCollectionView ;

void:subset <http://localhost:8081/fragment> ;

void:triples "0"^^xsd:int ;

hydra:totalItems "0"^^xsd:int .

}

A JavaScript-based client is Comunica which can be tried online (e.g. to query the localhost server).